Autonomous Cars & Cognitive Brain Science: Is There a Missing Link?

This article originally appeared on AutoVision News. It has been republished with permission.

“Consider the hallmarks of human intelligence,” writes Dr. Mónica López-González in her whitepaper published by the Society for Imaging Science and Technology. “Overcome by computers in speed, accuracy, and precision, we continue to surpass computers in our capacity to generalize, learn, and manipulate and integrate multiple streams of known and novel information. Zooming into the issue of data efficiency, one of the biggest challenges in Cognitive Science is modeling how our minds do so much cognitively despite minimal amounts of information.”

Or, as Dr. López-González will often say in her presentations: humans are very good at improvising. “So how is it that we humans improvise? What allows us to improvise so successfully in an environment?” she continued. “If we can understand the nitty-gritty of what’s involved in motion, knowledge, and information, then we can create a mathematical system that can mimic that.”

Dr. López-González, CEO and Chief Scientific Officer of La Petite Noiseuse Productions, is a cognitive brain scientist and linguist by training. “I’m really interested in the big question of human intelligence,” she explained. “And by human intelligence, I mean all the aspects involved in how and why we’re capable of maneuvering and surviving in the world or exacting change in the world.”

Examining The Checklist

The arrival of autonomous cars will enact a change in our world. Some changes may be harder to quantify at this exact moment, such as how the advent of self-driving cars will ultimately impact national infrastructure, local economies, or the legal system. Other changes seem more manageable to grasp right now, like how the current advancements in mobility are leading to a future with fewer fatal traffic accidents. Suffice it to say, as we move up through the SAE levels of automation, our world will change.

Perhaps a good strategy is to start with a change nearly everyone can agree on: if self-driving cars (or even next-gen ADAS technology) could reduce fatal traffic accidents, that would be a good thing. With that goal in mind, maybe the best course of action is something the autonomous vehicle community is already quite good at doing: examining the capabilities of current technology and setting new benchmarks to achieve higher levels of performance.

The level of openness and willingness to collaborate in the self-driving car industry is tremendous. Companies continue to work together to deploy new mobility solutions. Consumer advocacy organizations are calling for more precise guidelines and definitions. The industry’s top conferences have moved to virtual platforms to accommodate the engineering community during COVID-19. Despite the uncertainty of the pandemic, good things continue to materialize in the way of future mobility.

The question now is how to best capitalize on that momentum. Perhaps now is the time to look at our “checklist” and take inventory as an industry. Perhaps now is a good time to ask if we have all the disciplines and skillsets necessary to usher in the safer roads we all envision.

Vehicle Perception vs. Vehicle Cognition

Dr. López-González and her research need to make that checklist. Her education, background, and professional experience allow her to view self-driving technology through different lenses. Her vantage point is unique because it identifies gaps that must be closed if we want robust and safe automated driving systems. Dr. López-González is, so to speak, standing on a different hill, and as a result, she can view the landscape below differently.

“We’ve been systematically researching since the birth of cognitive science in the 1950s,” she said. “I think we need to take all of that research and put it together with the autonomous vehicle world and come up with a map for the future.”

The industry has worked diligently thus far to incorporate multiple disciplines of engineering and computer science. Now we are adding one that takes into consideration the depth of the human psyche. After all, we will eventually be asking a machine to drive, which has been a remarkably human behavior and activity for the last 120 years.

“We shouldn’t be talking about vehicle perception exactly, but rather vehicle cognition,” Dr. López-González said. “We have arrived at an amazing point in AI history, where we can and should close the gap between engineering, policymaking, and cognitive science.”

Cognitive Brain Science Is The “Glue”

Dr. López-González describes cognitive science as the “glue” between disciplines of the mind and brain. During her presentations, Dr. López-González often shows a graphic that links cognitive science with philosophy, psychology, computer science, neuroscience, linguistics, and anthropology. Cognitive science is about examining and assimilating the individual questions, theories, and methods from the aforementioned disciplines. Likewise, there is a strong link between cognitive science and the arts (Dr. López-González will frequently cite the work of Spanish artist Salvador Dalí and Czech writer Karel Čapek as examples).

“The arts are one of the highest levels of human intelligence because it involves things like emotion, language, body behavior and gestures, and our culture in general,” said Dr. López-González. “We can use the arts as a scientific and empirical platform to test questions about improvisation and adaptability in real-time and apply that to machine intelligence.”

Just as jazz musicians will improvise during a Saturday night performance at the club, we humans may need to improvise on a Monday morning commute to the office. An unexpected construction zone, bad weather, or another erratic motorist may require improvisation behind the wheel. The question is how an autonomous car will handle these and other similar (and likely sudden) changes in the operating environment that require human drivers to “think on their feet.”

“AI systems lack awareness and common sense, and they lack the ability to form and extract meaning and value from the context in pursuit of a changing goal that has future consequences,” explained Dr. López-González. “What this essentially means is these systems are not good at dealing with new and unknown situations.”

More Than Random Shapes

Dr. López-González encourages us to think of one scenario we have become accustomed to these days: the video conference call. She explains that when the video starts, we need very little contextual information to understand what is happening. We instantly recognize our colleagues and their home office and easily decipher any objects in the background. Even if the scene is different (say your colleague has a digital banner, or maybe a new shirt on), we still recognize them instantly. Different clothing or rearranged furniture in their office does not throw us off: we still know it’s our colleague.

This is because we don’t see, as Dr. López-González explains, these things as mere shapes. “You’re not just looking at a circle, which would be their head, let’s say an oval circle, and then a square, let’s say, which would be their body,” she said. “Instead, you’re able to identify the people on the call as human beings, and you can quickly see they are in a room with things behind them because you’re putting together what we call ‘top-down’ information.”

By contrast, autonomous cars excel at processing bottom-up information. Dr. López-González says this bottom-up information is best demonstrated in scene perception and object classification. Machine vision systems will identify various objects in a scene, denoting them with squares and rectangles. She explains that ADAS technologies are quite good at integrating all types of bottom-up information when perceiving a given scene: lines, curves, lights, trees, pedestrians, and other vehicles, for example, are all easily identifiable by today’s machine vision systems.

“But what makes us intelligent is that we’re integrating all those lines and shapes and circles with knowledge of what a human being is; what an animal is, what another car is, and the behavior of how each of them will walk, or run, or move,” Dr. López-González continued. “That’s the top-down information.”

Put another way, bottom-up information shows the what but top-down information is about the why and how. “We cannot forget the reason why you know what’s in that square is a fire hydrant versus a human being, versus a tree or a dog, is because you have knowledge about the world,” Dr. López-González said. “And that’s what the machines lack. They don’t have knowledge about the world like that. They can’t give meaning to all those squares that are being identified.”

Brain-Inspired Computing

To address this, Dr. López-González refers to a discipline known as brain-inspired computing. “These algorithms cannot infer anything because they cannot transfer knowledge from one domain to another,” she explained. “We do this instantly in microseconds. That’s human intelligence. The brain-inspired computing question says we need to understand human intelligence and higher-order thinking capacities so that we can then begin to make predictive type mathematical models that could be brought into the discussion.”

Cliché as it may sound, the human brain is still the most powerful tool in our arsenal. Our brains can create sonnets and symphonies, supercars and skyscrapers. We can reason, channel emotion, and absorb new concepts from the time we are children. If necessary, our brains can even develop new neural pathways to reverse the damage done from trauma and addiction.

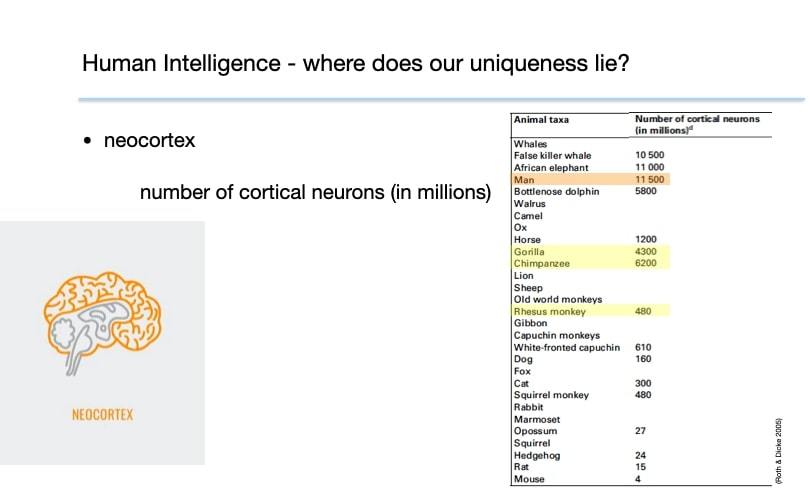

It may seem natural to say our brains can function the way they do because of their size, although sperm whales and elephants have larger brains than humans. Dolphins are pretty close to humans in brain size, but intelligent as dolphins are, they haven’t managed to colonize an entire planet. Instead, the advantage our brains have is in the number of cortical neurons, as outlined in the chart provided by Dr. López-González. In other words, our brains can “fire” at a higher density versus other animals, which explains why we are capable of such high levels of creative and intellectual thought. It also explains why, as humans, we can think on the fly better than machines can.

Minimal Space & Maximum Functionality

Our brains are somewhat similar to a modern car transmission in terms of space and functionality. When Chevrolet engineers were developing the 10-speed Hydra-Matic transmission for the 2017 Camaro ZL1, their goal was to replace the previous eight-speed unit. The challenge was keeping the new Hydra-Matic architecture roughly the same size as the previous eight-speed, despite having two more forward gears. Increasing the size of the transmission would add complexity and weight, a counterproductive proposition for a sports car that would eventually set a personal best on the Nürburgring a year later in 2018.

Instead, Chevy engineers designed four simple gearsets and six clutches: two brake clutches and four rotating clutches, just one more clutch than the previous eight-speed. The changes allowed the new Hydra-Matic to grow in terms of performance, not size. Where eight gears once lived, there were now 10. The additional gears in the same space would improve the Camaro ZL1’s track presence and fuel efficiency. Likewise, the upgraded transmission included a new electronic control system and performance calibrations.

Our brains are similar in that our “forward gears” and “performance calibrations” are always confined to the same space. “Our brains aren’t getting bigger, yet we are capable of intelligent behavior,” said Dr. López-González. “We need to take advantage of this minimal space that still functions at maximum capacity. And that is what I think machine intelligence could actually be, and that’s part of the brain-inspired computing approach.”

Arriving At The Table

The Gartner Hype Cycle is often referenced in the ADAS and engineering community as a representation of where we are right now in terms of autonomous driving. As we transition from the Trough of Disillusionment to the Slope of Enlightenment, there is ample opportunity to merge cognitive brain science with the engineering disciplines required for the design and development of future mobility solutions.

This is, as Dr. López-González suggests, the time to “pump the brakes,” and make sure we understand the critical differences between vehicle perception and vehicle cognition. Perhaps now is the time to consider that machine intelligence may benefit from a dose of human intellect by way of brain-inspired computing.

“Imagine if we had everybody sitting around a table talking about this,” Dr. López-González said. “I think we’ll get the answer.”

Carl Anthony is Managing Editor of Automoblog and a member of the Midwest Automotive Media Association and the Society of Automotive Historians. He serves on the board of directors for the Ally Jolie Baldwin Foundation, is a past president of Detroit Working Writers, and a loyal Detroit Lions fan.

Original article: Autonomous Cars & Cognitive Brain Science: Is There a Missing Link?

from Automoblog
